Machine Learning

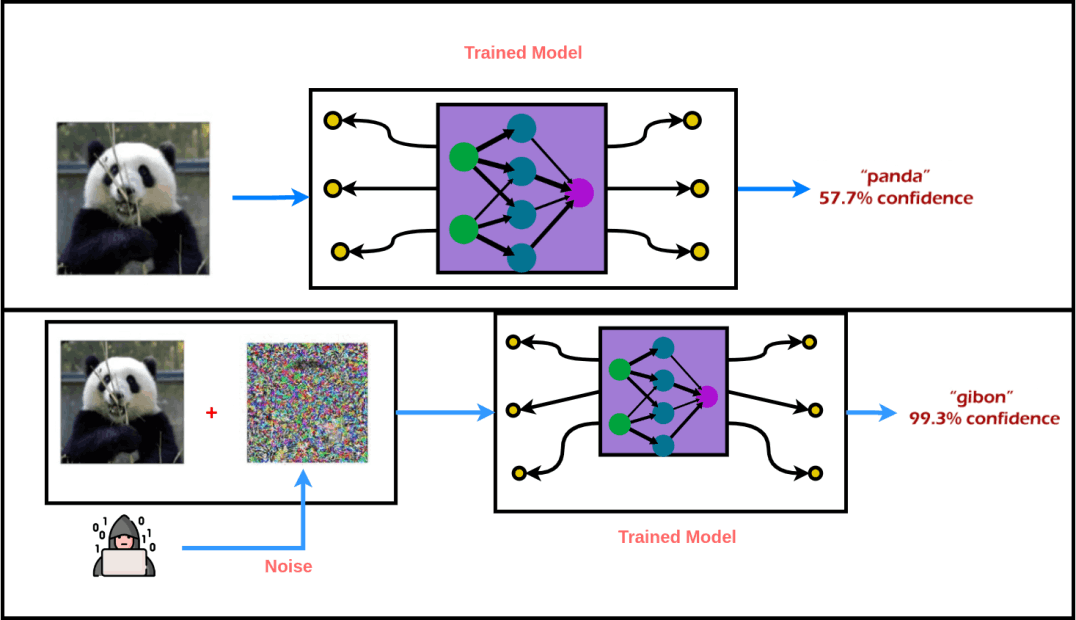

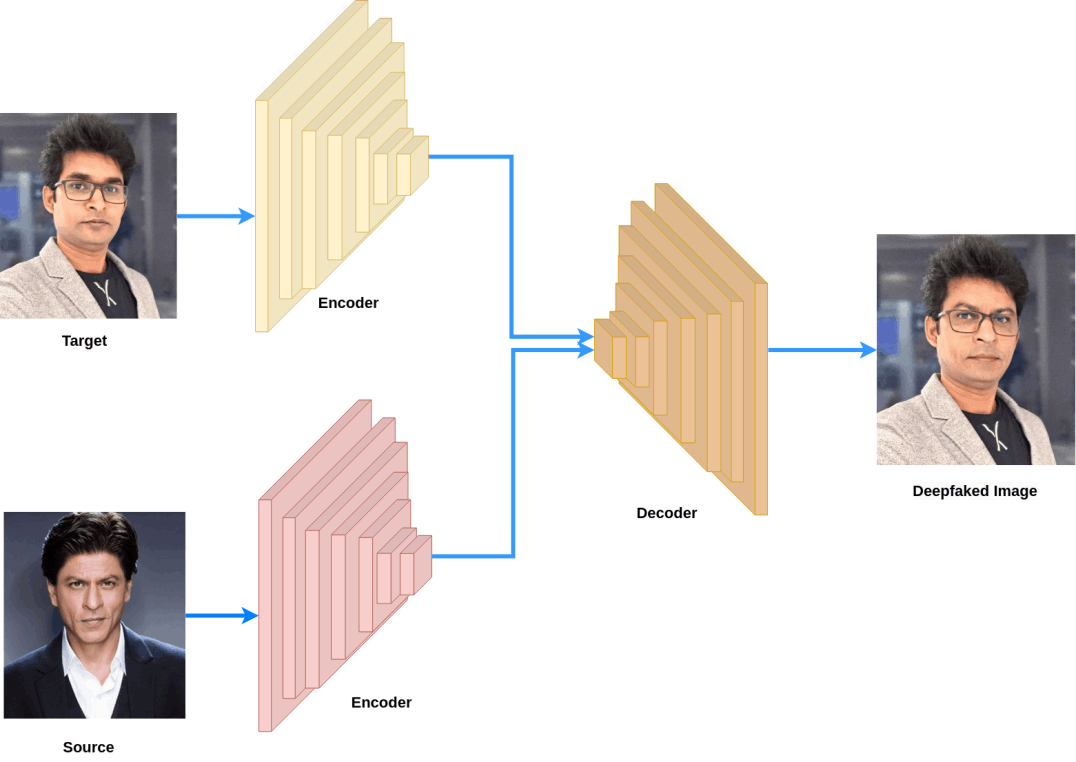

AI and its applications have become an integral part of our daily lives. Every day it amazes us with new capabilities and products, such as autonomous cars and smart homes. As the saying goes, “AI is the new electricity,” and its contributions are writing a new chapter of human history. Our dependence on AI is increasing, and that dependence can easily backfire if AI fails. Our group focused on two security aspects of ML: adversarial machine learning and the identification of fake media created by artificial intelligence (deepfakes).